The goal of this review is to provide an overview of current robotic approaches to precision weed management. This includes an investigation into applications within this field during the past 5 years, identifying which major technical areas currently preclude more widespread use, and which key topics will drive future development and utilisation.

Studies combining computer vision with traditional machine learning and deep learning are driving progress in weed detection and robotic approaches to mechanical weeding. Integrating key technologies for perception, decision-making, and control, autonomous weeding robots are emerging quickly. These effectively save effort while reducing environmental pollution caused by pesticide use.

This review assesses different weed detection methods and weeder robots used in precision weed management and summarises the trends in this area in recent years. The limitations of current systems are discussed, and ideas for future research directions are proposed.

According to the United Nations (UN), all countries must consider and address a common problem: global population growth, with the world population expected to reach nearly 10 billion by 2050 [1]. Population growth requires farmers to adapt how they control, manage, and monitor their farms to meet the growing demand for food [2]. According to [3••], food yields need to increase by 70%. In addition, billions of people worldwide are at risk from unsafe food, with millions becoming sick and hundreds of thousands dying annually [4]. This illustrates the need for higher standards in agricultural production, which is the basis of the food industry. However, many problems need resolving, such as the reduction of cultivated land and the loss of available labour. Other issues, including climate change [5], water pollution [6], and weeds, also affect agricultural productivity.

Weeds are unwanted plants that grow on farmland and compete with crops for nutrients, space, and sunlight. If not removed, they obstruct crop growth, causing a reduction in crop yield and, consequently, a reduction in profit for farmers [7]. Weed control is therefore an important means of improving crop productivity. Currently, large-scale spraying of pesticides is the most widely used weed control method, but this wastes resources and causes environmental pollution [8]. The design of a weeding system that reduces pesticide use is therefore urgently needed. Current weeding robots are designed around real-time image detection, as the early identification and control of weeds is paramount. The experiment in [9] investigated the significance of intervention timing: late intervention commencing at week 6 resulted in a weed survival probability of 0.54±0.08, versus 0.24±0.18 for earlier intervention at week 4.

Typical site-specific weed management (SSWM) includes four processes:

For data collection, optical sensors are the most widely used technology in weed recognition [10]. Various types of sensors, including machine vision, visible and near infrared (Vis–NIR) spectroscopy [11, 12], multi-/hyper-spectral images [13,14,15,16], and distance sensing techniques [17], have been tested. These sensors can be grouped into two categories: airborne remote sensing and ground-based techniques. The former commonly uses sensors mounted on balloons, airplanes, unmanned aerial vehicles (UAV) [18,19,20,21], and satellites for data acquisition. The obtained images are then analysed off-line to generate weed maps for subsequent SSWM operations. Remote sensing techniques are helpful in map-based SSWM as they are well-suited to larger areas, but are not a real-time process and have a lower spatial resolution compared to ground-based techniques. Ground-based techniques collect and promptly process weed information enabling real-time SSWM operation. Compound signalling is another method [22, 23] which can be applied to crops and weed detection.

Weed detection plays a key role in SSWM, since it provides the information needed by the subsequent procedures and sets the upper limit on weeding performance. Some early studies focused on the efficacy and reliability of using different light spectra and simple image processing techniques [24]. Thanks to advances in sensors, computational power, and algorithms, several breakthroughs have been made in weed detection within the past few years. Detection is based on using many labelled plant images to teach a model to distinguish the desirable crops from weeds, recognise patterns in weed distribution, and identify weed edges/boundaries.

Agriculture still relies heavily on a human workforce, which can be affected by health problems such as the worldwide public health crisis generated by the coronavirus pandemic (COVID-19). In addition to causing many deaths around the world, the pandemic has imposed several forms of restriction on agricultural activities, including weed management. Thus, an effective weeding method is urgently needed. In [25], Lati et al. compared Robovator weed control with hand weeding, and the results showed that robotic cultivators can reduce the dependency on hand weeding. Technological advancements and price reductions in these types of machines will improve their weed removal efficacy. In [26], Kirtan et al. studied different specialist and wireless systems employed in agricultural sectors, including weed management. Results showed that machine learning is necessary for sustainable development in the farming sector. In [27], Zha conducted a review of AI use in soil and weed management with IoT technologies, and demonstrated that computer vision algorithms, such as deep belief networks (DBN) and convolutional neural networks (CNN), show promise in fruit classification and weed detection in complex environments. More specifically, environments with varying ambient lighting, background complexity, capture angle, and fruit/weed shapes and colours were explored.

Because the agricultural environment is very complex, with varying illumination, occlusion, and different growth stages under field conditions, among other factors, weed identification performance is often unsatisfactory. Most methods employ supervised learning and require large amounts of annotated data for optimal performance. However, due to the complex agricultural environment and the time-consuming nature of image annotation, there are very few public image datasets. To address these problems, transfer learning, model reuse, self-supervised, and even unsupervised methods are employed.

This review is organised as follows: a brief overview of weed detection based on machine and deep learning is presented in “Weed Detection Methods.” “Currently Emerging Weeding Robots” describes varied types of weeding robots. An overall discussion and conclusions are presented in “Discussion,” which also considers the remaining challenges, limitations, and recommendations. Finally, “Conclusion” concludes this review.

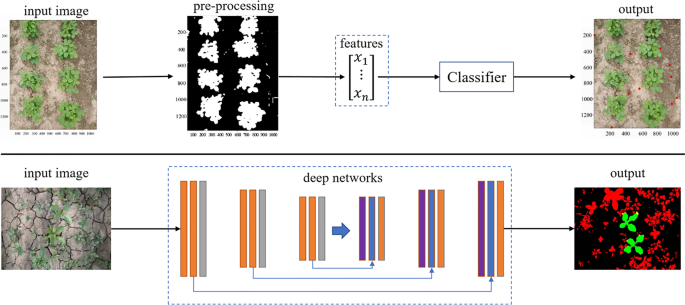

As the cost of labour has increased, and people have become more concerned about health and environmental issues, site-specific weed management (SSWM) has become attractive. The essential first step in developing SSWM is to detect and recognise weeds. Weed detection methods can be divided into two groups: those based on traditional machine learning (ML) and those based on deep learning (DL). Figure 1 shows the difference between ML and DL. In this section, methods based on ML and DL are introduced.

In the early stages, many scholars used traditional ML algorithms to classify weeds and crops. A typical ML-based weed detection technique involves a sequence of steps [24, 28, 29], including data acquisition, pre-processing, feature extraction, and classification. The review in [30] covered plant image segmentation-based methods from 2008 to 2015 and highlighted the advantages and disadvantages of colour index-based methods and threshold-based approaches.
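
To make the colour index and threshold idea concrete, the following is a minimal sketch rather than the method of any cited study. It assumes the Excess Green index (2g − r − b on normalised chromatic coordinates) as one example of a colour index, and the threshold value of 0.1, the function names, and the tiny 2×2 image are hypothetical choices made for illustration only.

```python
def excess_green(pixel):
    """Return the Excess Green index 2g - r - b for one (R, G, B) pixel,
    computed on chromatic coordinates normalised by R + G + B."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:  # pure black pixel: no chromatic information
        return 0.0
    return (2 * g - r - b) / total


def segment_vegetation(image, threshold=0.1):
    """Return a binary mask (1 = vegetation, 0 = background) for a
    2-D list of RGB pixels. The threshold is an assumed value."""
    return [[1 if excess_green(p) > threshold else 0 for p in row]
            for row in image]


# Hypothetical 2x2 image: two greenish plant pixels, two brownish soil pixels.
image = [
    [(40, 160, 30), (120, 90, 60)],
    [(110, 85, 70), (60, 180, 50)],
]
mask = segment_vegetation(image)
print(mask)
```

In practice the threshold is usually chosen per dataset or derived automatically, and the resulting mask only separates vegetation from soil; distinguishing crops from weeds still requires the feature extraction and classification steps.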

Since traditional feature-based machine learning has low computing power and data quantity requirements, and its results and visual features are easily understood, it is the most commonly used weed detection method. The visual characteristics that differentiate crops from weeds can be divided into four categories: texture [31,32,33,34], colour [33, 35], shape [36], and spectral features [37,38,39,40,41,42].

Some unsupervised learning methods are also used in weed detection. A clustering approach without prior knowledge was proposed in [43], which eliminates the need for the system to be retrained for fields with different weed species. The similarity between weeds and crops, particularly during early growth, makes identification using a single feature impossible. Therefore, researchers use multi-feature fusion to identify weeds, with great success. The following paragraphs introduce multi-feature approaches used during the last 5 years.

Gai et al. [35] fused colour and depth images to detect and localise crops in their early growth stages. Ahmad et al. [31] combined edge orientation and shape matrix histograms using Sobel filters, and feature coverage for each cell, to detect weeds during their early growth stages, achieving a classification accuracy of 98.40%.

Lin et al. [44] combined 11 features, including four spectral features, three spatial features, and three texture features, to identify eight plant species. Sabzi et al. [45] extracted eight texture features based on the gray-level co-occurrence matrix (GLCM), two spectral texture descriptors, thirteen different colour features, five moment-invariant features, and eight shape features. Zou et al. [46••] extracted six features, including the histogram of oriented gradients (HOG), rotation-invariant local binary pattern (LBP), Hu invariant moments, GLCM, and the gray-level gradient co-occurrence matrix (GGCM). A classifier was then employed to identify crop seedlings and weeds.
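
As an illustration of how GLCM-based texture features are obtained, the sketch below builds a normalised co-occurrence matrix for a horizontal neighbour offset and derives two common descriptors, contrast and energy. It is a simplified example under stated assumptions: the function names, the 4-level quantisation, and the toy patch are hypothetical and are not taken from the cited studies.

```python
def glcm(image, levels, dx=1, dy=0):
    """Return the normalised gray-level co-occurrence matrix of a 2-D list
    of integer gray levels in range(levels), for the pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                counts[image[y][x]][image[ny][nx]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("image too small for the requested offset")
    return [[c / total for c in row] for row in counts]


def contrast(p):
    """Sum of p(i, j) * (i - j)^2: large when neighbours differ strongly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def energy(p):
    """Sum of squared entries: large for uniform, repetitive textures."""
    return sum(v * v for row in p for v in row)


# Hypothetical 4x4 patch quantised to 4 gray levels.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]
p = glcm(patch, levels=4)
texture_features = [contrast(p), energy(p)]
print(texture_features)
```

A feature vector for a classifier would typically concatenate such descriptors over several offsets and directions with the colour, shape, and spectral features mentioned above.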

Random forest (RF) [47], Bayesian decision [48], K-means [49], support vector machines (SVM) [50, 51], and k-nearest neighbour (KNN) [52] have been widely used for weed and crop classification [53]. Other algorithms, including naive Bayes [54], artificial neural networks (ANNs), and AdaBoost [31], have also been used in weed detection. The study in [55] compared the performances of ConvNets, SVM, RF, and AdaBoost. Results showed that methods based on ConvNets achieved excellent results, with an accuracy higher than 98% in the classification of all classes. The authors of [56] proposed a system that performs vegetation detection, feature extraction, random forest classification, and smoothing via a Markov random field to obtain accurate crop and weed estimates. A weed detection system based on linear and quadratic classifiers was developed to target goldenrod weed in [57]. Experimental results showed that the proposed system has potential as a targeted application to control goldenrod.

Designing features manually requires prior knowledge and expertise, which limits improvements in machine learning accuracy. Furthermore, with the substantial increase in computing power and the availability of large amounts of training data, a neural network can independently learn features and automatically optimise the weights of each layer, significantly improving DL performance. Following recent DL achievements, it is logical to apply it to weed detection using machine vision. Many classical neural network architectures, such as ResNet [58], DenseNet, and GoogleNet, have achieved state-of-the-art performance. According to whether the input data is in Euclidean space, deep learning is divided into two categories: convolutional neural networks (CNN) [59, 60•, 61] and graph convolutional networks (GCN) [62].

According to whether the data is labelled, deep learning can be divided into supervised learning, semi-supervised learning, and unsupervised learning. Several supervised and semi-supervised methods have recently emerged. Therefore, these two methods are introduced in the two following subsections.

Supervised learning is the current mainstream deep learning method used to detect weeds and crops. You et al. [63] proposed an improved semantic segmentation network integrated with a hybrid dilated convolutional layer and DropBlock to enlarge the receptive field and learn robust features. Lottes et al. [64] exploited a fully convolutional network integrating sequential information to encode the spatial arrangement of plants in a row using 3D convolutions over an image sequence. Hu et al. [65•] designed a graph-based deep learning architecture, namely Graph Weeds Net (GWN), which involves multi-scale graph representations that precisely characterise weed patterns. Zou et al. [46••] designed a simplified U-Net, which was trained on a synthesised training set. The F1-score achieved on the test set was 93.59%. Lottes et al. [66] presented a novel system using an end-to-end trainable fully convolutional network to estimate the stem location of weeds, which enables robots to perform precise mechanical treatment. In [67], three deep convolutional neural architectures, DetectNet, GoogleNet, and VGGNet, were evaluated for their weed detection capability in bermudagrass, and VGGNet was found to be most effective at detecting multiple broadleaf weed species at different growth stages.

Supervised deep learning methods require large training datasets with ground truth annotations, which require time and effort to build, consequently becoming the main obstacle to supervised learning. Unsupervised learning is an approach which does not need annotated images. The semi-supervised method, which needs a small amount of labelled data, is somewhere between supervised and unsupervised methods.

Pseudo-labelling is a process that uses a model trained on labelled data to make predictions on unlabelled data, filters the samples based on the predicted results, and then feeds the retained samples back into the model for training. Zou et al. [46••] constructed a library of real in-situ plant images, which were then cut and randomly pasted onto a prepared soil image, with rotations, to build a synthetic image dataset that can be used to train a neural network.
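
The pseudo-labelling loop described above can be sketched as follows. This is a toy illustration, not the procedure of any cited paper: a nearest-centroid classifier on a single scalar feature stands in for the neural network, and the confidence measure, the threshold of 0.6, the number of rounds, and all sample values are assumptions chosen for the example.

```python
def fit_centroids(samples):
    """samples: list of (feature, label). Returns {label: mean feature}."""
    sums, counts = {}, {}
    for x, y in samples:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Return (label, confidence in [0, 1]); needs at least two classes."""
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    d1 = abs(x - centroids[ranked[0]])
    d2 = abs(x - centroids[ranked[1]])
    confidence = 0.0 if d1 + d2 == 0 else (d2 - d1) / (d1 + d2)
    return ranked[0], confidence


def pseudo_label(labelled, unlabelled, threshold=0.6, rounds=3):
    """Train, predict on unlabelled data, keep confident predictions as
    pseudo-labels, and retrain. Returns (model, training set, leftovers)."""
    train, pool = list(labelled), list(unlabelled)
    for _ in range(rounds):
        centroids = fit_centroids(train)
        accepted, remaining = [], []
        for x in pool:
            label, conf = predict(centroids, x)
            if conf >= threshold:
                accepted.append((x, label))  # filtered pseudo-label
            else:
                remaining.append(x)          # too uncertain, keep unlabelled
        if not accepted:
            break
        train.extend(accepted)
        pool = remaining
    return fit_centroids(train), train, pool


# Hypothetical scalar features (e.g. a leaf-shape score) for two classes.
labelled = [(0.2, "crop"), (0.3, "crop"), (0.8, "weed"), (0.9, "weed")]
unlabelled = [0.1, 0.25, 0.55, 0.85, 0.95]
model, train, pool = pseudo_label(labelled, unlabelled)
print(len(train), pool)
```

The filtering step matters: samples near the decision boundary are left unlabelled rather than added with a possibly wrong label, which would otherwise reinforce the model's own errors.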

Lottes et al. [68] considered that as most crops are planted in rows, a small gap between the rows significantly reduces the ability to adapt a vision-based classifier to a new field. Building on the row structure, Bah et al. [18] and Louargant et al. [69] analysed crop rows to identify inter-row weeds and crops, constructed a training dataset that does not require manual annotation, and then trained CNNs on it to build a model that is able to detect crops and weeds.
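
The idea of using crop rows to label data without manual annotation can be illustrated with a deliberately simplified sketch. It assumes that a binary vegetation mask is already available, that crop rows run vertically in the image, and that a column counts as part of a crop row when at least half of its pixels are vegetation; these assumptions, the label codes, and the function name are hypothetical and much cruder than the row detection used in the cited work.

```python
BACKGROUND, CROP, WEED = 0, 1, 2


def label_by_rows(mask, row_fraction=0.5):
    """Label vegetation pixels as CROP if they lie in a dense (crop row)
    column and as WEED otherwise. mask is a 2-D list of 0/1 values."""
    n_rows, n_cols = len(mask), len(mask[0])
    # Fraction of vegetation pixels in each image column.
    density = [sum(mask[y][x] for y in range(n_rows)) / n_rows
               for x in range(n_cols)]
    crop_columns = {x for x, d in enumerate(density) if d >= row_fraction}
    labels = []
    for y in range(n_rows):
        labels.append([
            BACKGROUND if mask[y][x] == 0
            else (CROP if x in crop_columns else WEED)
            for x in range(n_cols)
        ])
    return labels


# Hypothetical mask: crop rows in columns 1 and 4, one inter-row weed pixel.
mask = [
    [0, 1, 0, 0, 1],
    [0, 1, 1, 0, 1],
    [0, 1, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
labels = label_by_rows(mask)
for row in labels:
    print(row)
```

Labels produced this way are noisy, since weeds growing inside a row are marked as crop, so they are best treated as automatically generated training data rather than ground truth.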

Shahbaz et al. [70] proposed a semi-supervised generative adversarial network (SGAN) for crop and weed classification in early stages of growth, and achieved an average accuracy of 90% when 80% of the training data was unlabelled. Jiang et al. [71] proposed a CNN feature-based graph convolutional network and combined the features of unlabelled vertices with nearby labelled ones; thus, the problem of weed and crop recognition was transferred to semi-supervised learning on a graph to reduce manual effort.

The agriculture robot or agribot is a robot used in agriculture. It is commonly believed that progress in robotics science and engineering may soon change the face of farming. Global spending and research into the subject are experiencing near exponential growth [72]. In this section, robots listed in Table 1 used commercially and in research within the last 5 years are summarised.

The Laser Weeder is designed for row crops on farms of 200 to tens of thousands of acres. A single robot can weed 15–20 acres per day and replace several hand-weeding crews. The robots have undergone beta testing on specialty crop farms and in multiple crop fields, including broccoli and onions.

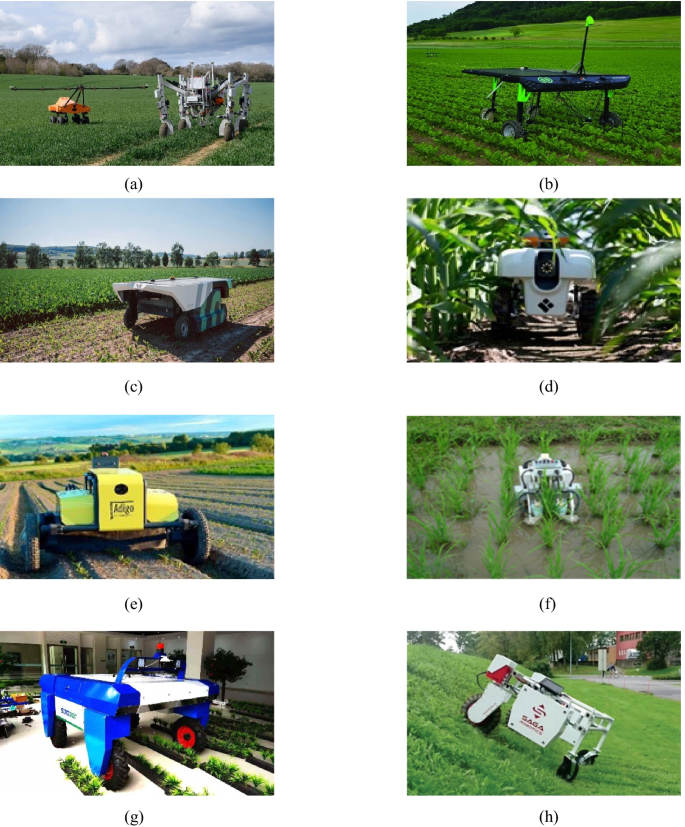

EcoRobotix [74], shown in Fig. 2b, is a prototype designed in Switzerland for spot thinning and weeding. It is equipped with a computer system that identifies weeds. It sprays the detected weeds with a small dose of herbicide and is powered by a solar-powered battery mounted on its upper surface. According to the manufacturers of EcoRobotix, their prototype can reduce the volume of required herbicides by a factor of 20 compared to conventional spray systems. A further robot named AVO, shown in Fig. 2c, was designed to perform autonomous weeding operations in flat fields and row crops. Using machine learning, the robot detects and selectively sprays the weeds with a micro-dose of herbicide. The centimetre-precise detection and spraying reduces the herbicide volume by more than 95%, while ensuring crops are not sprayed, therefore preserving yield.

Franklin Robotics [73] designed a new gardening robot named Tertill. The sensors it is equipped with allow it to recognise weeds and cut them using small scissors. It uses solar energy as its power source and is waterproof, making it suitable for both gardens and farmland.

TerraSentia [78] developed a small agricultural robot, shown in Fig. 2d, for autonomous weed detection. Bonirob [81], developed by Bosch Deepfield Robotics, is credited with eliminating some of the most tedious tasks in modern farming: planting and weeding. The autonomous robot is built to function as a mobile plant lab, able to decide which strains of plant are most able to survive insects and viruses, and how much fertiliser they need, before smashing any weeds with a ramming rod.

In the past 5 years, several papers on weeding robots have been published. According to our assessment, these robots are still a long way from being able to be applied practically.

Xiong et al. [82] developed a prototype robot equipped with a dual-gimbal. The robot was able to detect weeds in indoor environments, carry lasers with which to target weeds, and control the platform in real-time, realising continuous weeding. Tests indicated that with a laser traversal speed of 30 mm/s and a dwell time of 0.64 s per weed, the robot displayed a high hit rate of 97%.

Sujaritha et al. [83] designed a weed detecting robotic prototype using a Raspberry Pi micro-controller and suitable input/output subsystems such as cameras, small light sources, and powered motors. The prototype correctly identified sugarcane crop among nine different weed species based on rotation invariant and scale invariant texture analysis methods with a fuzzy real-time classifier. The system detects weeds with 92.9% accuracy, with a processing time of 0.02 s.

Utstumo et al. [84] demonstrated an autonomous robot platform Adigo, shown in Fig. 2e. The proposed robot was designed with the specific task of Drop on Demand herbicide application. The robot effectively controlled all weeds in the field trial with a ten-fold reduction in herbicide use.

The Ladybird was designed by the University of Sydney. As its name indicates, it looks like a ladybird; its shell shades the vision system on the underside of the robot and prevents it from being affected by light conditions. The wings can be raised or lowered to accommodate varying crop height. To control weeds in the field, the Ladybird robot was fitted with a spraying end effector attached to a 6-axis robotic arm. When the machine learning algorithm identifies a weed, the co-ordinates of the weed are transferred to the intelligent robotic system, which then positions itself directly over the weed. Once in position, a small and controllable volume of herbicide spray is fired at the weed exactly where it is required. The University of Sydney also proposed a smaller robot named RIPPA, which is the prototype for the commercial version.

Bawden et al. [77] developed a modular weeding robot called AgBotII. It identifies crops and weeds using LBP and covariance features in its image processing stage and removes weeds using three different tools: an arrow-shaped hoe, a toothed tool, and a cutting tool. This platform can already be used in commercial applications. In order to comprehensively utilise the advantages of multiple robots, Pretto et al. [85] provided an adaptive solution by combining the aerial survey capabilities of a small, autonomous UAV with a multi-purpose agricultural unmanned ground vehicle.

Sori et al. [79] proposed a weeding robot, shown in Fig. 2f, that can navigate autonomously while weeding a paddy field. The robot removes weeds by churning up the soil and inhibits their growth by blocking off sunlight. Thorvald, shown in Fig. 2h, is a number of different robots rolled into one, all built using the same basic modules, and it can be rebuilt using only basic hand tools. A prototype robot named Sinobot was designed for weeding. As shown in Fig. 2g, the prototype, equipped with four independently steered wheels, can automatically plan routes and supports remote control.

Having reviewed existing weeding robots and weed detection methods, this section discusses the research trends, common difficulties, and finally, the desirable prerequisites for mechanical weeding robots.

In actual production environments, to take full advantage of the technologies, robots that can run 24/7 are required, so weed recognition methods need to adapt to a variety of working environments, particularly different lighting and weather conditions. This necessitates higher requirements on data pre-processing methods and sensors.

Plants grow randomly, which presents several issues, including occlusion. During actual weeding operations, to achieve better results, the robot must avoid crops that shade other plants and act only on the occluded weeds. Therefore, in addition to identifying weeds, the occlusion relationship between plants needs to be determined. Dyrmann et al. [89] used a fully convolutional neural network to detect single weeds in cereal fields despite heavy leaf occlusion.

Large-scale datasets are essential for developing high-performance and robust deep learning models. Table 2 lists several common datasets compiled within the last 5 years which are related to the field of weed detection and identification. A lack of large datasets has limited the development of advanced methods applicable in a large variety of fields and has hindered the path to commercial viability. Therefore, large-scale datasets covering diverse and complex conditions are in high demand to facilitate practical deployment. Xie et al. [90•] and Gao et al. [91] proposed algorithms to generate high-fidelity synthetic data, and combined synthetic data with raw data to train the network. The results showed that the proposed method can effectively overcome the impact of imprecise and insufficient training samples. The authors of [92] presented an approach which uses real-world textures to create an explicit model of the target environment and generate a large variety of annotated data. This approach removes the need for human intervention in the labelling phase, and reduces the time and effort needed to train a visual inference model.

Table 2 The list of publicly available datasets published within 5 years

Since building large datasets is extremely costly, a method which reduces the cost of annotation is urgently needed. Many state-of-the-art methods and algorithms have been proposed to solve different tasks, and some can be used to label data automatically, after which the labelled data are collated manually. A self-supervised method was proposed in [100] to automatically generate training data using a row detection and extraction method. Shorewala et al. [101] classified pixels on unlabelled images that are similar to each other, according to a response map, into two clusters (vegetation and background) based on an unsupervised deep learning-based segmentation network. Sheikh et al. [102•] used k-means to determine 20 cluster categories from 10 randomly selected images and then manually determined which categories represent vegetation to obtain the pseudo ground truth. Furthermore, Sheikh et al. compared three sample selection methods, based on loss, the L2 norm of gradients, and gradient projection, with random sampling and entropy-based selection, and found that the suggested methods achieved a higher semantic segmentation accuracy with few training samples.
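
A minimal sketch of cluster-based pseudo ground truth, in the spirit of the k-means approach described above, is given below. It is heavily simplified and makes several assumptions: each pixel is reduced to a single hypothetical greenness value, only three clusters are used instead of 20, the initialisation is deterministic, and the "manual" choice of the vegetation cluster is hard-coded.

```python
def nearest(value, centres):
    """Index of the centre closest to value."""
    return min(range(len(centres)), key=lambda c: abs(value - centres[c]))


def kmeans_1d(values, k, iterations=20):
    """Plain k-means on scalar values; returns the list of k cluster centres."""
    ordered = sorted(values)
    # Deterministic initialisation: k evenly spaced samples of the sorted data.
    centres = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            groups[nearest(v, centres)].append(v)
        updated = [sum(g) / len(g) if g else centres[i]
                   for i, g in enumerate(groups)]
        if updated == centres:  # converged
            break
        centres = updated
    return centres


# Hypothetical per-pixel greenness values: soil, dense plants, sparse plants.
greenness = [0.02, 0.05, 0.04, 0.61, 0.58, 0.65, 0.30, 0.33]
centres = kmeans_1d(greenness, k=3)
clusters = [nearest(v, centres) for v in greenness]

# A person inspects the clusters and marks which ones are vegetation;
# here cluster 2 (the greenest) is assumed to have been selected.
vegetation_clusters = {2}
pseudo_ground_truth = [1 if c in vegetation_clusters else 0 for c in clusters]
print(clusters, pseudo_ground_truth)
```

The manual effort is reduced to deciding, once per cluster, whether it represents vegetation, instead of annotating every pixel.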

Transfer learning methods aim to apply knowledge and skills learned in previous domains/tasks to novel domains/tasks. According to the target task, the examples in the source domain that are useful to the target domain are given new weights to ensure the improved source domain is close to the distribution of the target domain, and a reliable algorithm is obtained from the improved domain. In deep learning, the typical transfer learning method is to fine-tune models trained on other similar tasks to achieve enhanced results, and transfer learning has demonstrated a very promising classification performance under varying ambient light conditions [103]. Bosilj et al. [104•] explored the role of knowledge transfer between deep-learning-based classifiers with different crop types, with the goal of reducing retraining time and labelling effort, required for a new crop. Results show that even when the data used for retraining is imperfectly annotated, the classification performance is within 2% of that of networks trained with laboriously annotated pixel-precision data.

As manual annotation can be both costly and inaccurate, identification algorithms that require only a small number of annotations, or none at all, are needed. In [105], Zhan et al. utilised a self-supervised method to learn occlusion order and resolve the inconsistency in invisible parts that arises when multiple annotators are used.

Traditional and deep learning methods should be combined to improve the level of weed detection. Traditional methods use a small volume of labelled data to locate a usable classification criterion. However, traditional methods necessitate hand-crafted features that come from prior knowledge, which limits their performance. Asad et al. [106] used maximum likelihood classification to segment the background and foreground, and a semantic segmentation model to detect weeds.

A multi-robot system (MRS) is a group of robots designed to perform collective behaviours, which makes goals that are impossible for a single robot feasible and attainable [107]. Compared with a single robot, swarm robots can improve weeding efficiency. In addition, MRS have other advantages. Firstly, they have a high fault tolerance, as the failure of one robot does not cause the entire system to crash. Secondly, a robot swarm adapts better to the environment, and the swarm algorithm works according to the optimal plan. For multi-robot systems to become practical, we need coordination algorithms that can scale up to large teams of robots dealing with dynamically changing, failure-prone, contested, and uncertain environments [108, 109]. Bechar et al. [110] presented three strategies for coordinated multi-agent weeding under the conditions of partial environmental information.

Robots or intelligent automation systems are generally highly complex since they consist of several different sub-systems which must be integrated and correctly synchronised to perfectly perform tasks as a whole, and to successfully transfer the required information [110]. Modularisation refers to the decomposition of robots into mutually independent parts or breaking cluster systems into different robots. The goal is to reduce build time and cost to a minimum, as this will enable low cost swarms of high-quality robots [111].

Evidence of negative environmental impacts from herbicides is growing, and herbicide resistance is increasingly prevalent [112]. Furthermore, with few new herbicides pending release, no new mechanism of action in 30 years, and an increasing number of herbicide-resistant weeds, the need for new weed control tools is overwhelming [113]. Thus, ways to minimise crop damage and pesticide dosage when removing weeds, and improving weeding efficiency through actuator design, will become the focus of future development. McCool et al. [9] compared the effect of various mechanical tools including arrow hoe, tine, and whipper-snipper (W/S). Results showed W/S had better cottonweed weeding efficacy, tine performed better with Feathertop Rhodes, and arrow hoe worked better with wild oats. Furthermore, other methods such as infrared, laser [75], and microwave weeding need development.

The system must be developed to overcome difficult problems such as continuously changing conditions, variability of the produce and environment, and hostile environmental conditions such as vibration, dust, extreme temperature, and humidity. Even though much effort has been put into developing obstacle detection and avoidance algorithms and systems, this is still at the research stage [110]. Multi-sensor fusion has become a popular technology to improve recognition accuracy. According to [114], vision systems need not be a bottleneck in the detection of items with high-end GPUs.

This review summarises the current status of robotic approaches to mechanical weeding. Accurate weed detection is a prerequisite in weed management. Two weed detection technology categories are discussed in “Weed Detection Methods.” Of the two, deep neural network architectures deliver better performance and enable quicker application development. Weed recognition still has several issues, including light changes, irregular growth, severe occlusion, and difficulty in early recognition. Therefore, a dataset containing the above situations is required. More hybrid models using deep learning and traditional image processing are expected to be developed in the future. Another trend is to reduce the annotation effort using self-supervised methods.

Commercial weeding machines are emerging onto the market, but most of them only target a few specific crops and weeds. Designing a robot that can cope with a variety of scenarios and can be adjusted quickly to new environments is a priority. Currently, the high cost of these robotic weeding systems hinders further commercialisation. Reducing the cost and improving the efficiency of weeding has become the focus of current research.

This work is funded by Shanghai Agricultural and Rural Committee, and the project number is 202002080009F01466.